Thread 'WCG: new systems download 100s of CPU work units, not possible to work all'


| Author | Message |

|---|---|

Joseph Stateson Joined: 27 Jun 08 Posts: 642

|

I recently assembled a pair of Windows systems with WCG pre-configured as "0" share. Normally only 1 WU per CPU gets downloaded. Both systems have the older BOINC 7.16.3. Would the newer 7.20 handle this initialization correctly? I am guessing the project sees 12 threads and downloads a boatload of tasks, and never notices that the share is supposed to be 0 until after the download. I end up aborting 400+ files: about 58 days of work where the deadline was only about 3 days in the first place. |

|

Joined: 5 Oct 06 Posts: 5150

|

There were a lot of changes, and bugs, round about v7.16.3 - personally, I wouldn't touch it (again) with a bargepole. v7.16.20 is much, much better - though I can't speak to the specific problem you're experiencing. BOINC projects cannot send work arbitrarily - the internet, and your router, make that impossible. BOINC can only send work as a reply to a request your client has made. So, your first port of call is the event log, perhaps with <sched_op_debug> added to the basic list. What is your client requesting, how often, and what is it getting in return? Did those 400+ arrive in a single batch, all with the same deadline, or did they arrive in repeated batches, every two minutes, with deadlines offset by about 125 seconds? There was, and I think still is, a bug in the BOINC client which caused those repeated requests when <max_concurrent> was used in an app_config.xml file: remove optional extensions like that until you have got to know the normal behaviour of your new machines, and have allowed them to settle down into a stable state. Setting a resource share of zero implies that you intend the new machines to spend most of their time doing something else. What's that? If it's one or more other BOINC projects, have you set any optional parameters to control those, too? |
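As a rough illustration of answering "what is your client requesting?", here is a minimal C++ sketch that pulls the requested seconds out of a `[sched_op]` work-request line from the event log. The line format is an assumption based on the log excerpts quoted elsewhere in this thread, not on a documented format, and `WorkRequest` and `parse_work_request` are hypothetical names that are not part of BOINC.

```cpp
#include <regex>
#include <string>

// One "[sched_op] ... work request" entry pulled from an event-log line.
struct WorkRequest {
    std::string resource;  // e.g. "CPU", "NVIDIA GPU", "Intel GPU"
    double seconds;        // seconds of work the client asked for
    double devices;        // idle device instances reported in the request
};

// Fills `out` and returns true if `line` is a work-request line.
// Returns false (leaving `out` untouched) for any other line.
// The pattern is assumed from log lines quoted in this thread.
bool parse_work_request(const std::string& line, WorkRequest& out) {
    static const std::regex pattern(
        R"(\[sched_op\] (.+) work request: ([0-9.]+) seconds; ([0-9.]+) devices)");
    std::smatch m;
    if (!std::regex_search(line, m, pattern)) {
        return false;
    }
    out.resource = m[1].str();
    out.seconds = std::stod(m[2].str());
    out.devices = std::stod(m[3].str());
    return true;
}
```

Feeding each event-log line through `parse_work_request` and printing the resource and seconds would show at a glance whether the client keeps asking for the same amount of work every few seconds.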

|

Joined: 31 Dec 18 Posts: 330

|

> I recently assembled a pair of Windows systems with WCG pre-configured as "0" share. Normally only 1 WU per CPU gets downloaded.

I have not tried it, but the following workaround should initialise the project share correctly:

1. Download BOINC.
2. Turn off the network connection.
3. Install BOINC.
4. Go into Activity and set to suspend (active never).
5. Restart the network connection.
6. Connect to the projects you want.
7. Set the project shares you want.
8. Go into Projects and update each project twice.
9. Go into Activity and set to normal (work according to preferences).

The system should then take the zero project share and only download one task. |

|

Joined: 5 Oct 06 Posts: 5150

|

Added to which: Set "No New Tasks" at the very first moment you can after the 'attach to project' step is complete - within one or two seconds, if possible. Only allow new work again after ensuring that all project optional settings (resource share, venue, devices to use, sub-projects to run) have been successfully downloaded. |

|

Joined: 5 Oct 06 Posts: 5150

|

> There was, and I think still is, a bug in the BOINC client which caused those repeated requests when <max_concurrent> was used in an app_config.xml file: remove optional extensions like that until you have got to know the normal behaviour of your new machines, and have allowed them to settle down into a stable state.

I was right - that bug does still exist in the current code. But tonight, David has published #4592, which may correct it. I'll test in the morning. |

Keith Myers Joined: 17 Nov 16 Posts: 922

|

This has been sorely needed for a long while. Glad to see it finally show up. |

|

Joined: 31 Dec 18 Posts: 330

|

> Added to which: Set "No New Tasks" at the very first moment you can after the 'attach to project' step is complete - within one or two seconds, if possible.

Thank you 🙏 I thought that would be taken care of by suspending the project - I know it won’t pull new work if a task for that project is suspended and I extrapolated, my bad :-) |

|

Joined: 5 Oct 06 Posts: 5150

|

No probs. Many of us old-timers probably haven't added a new project for years, and forget how it goes. As soon as you do add a project, the client asks for 1 second of work, just to get things started. You have to move very quickly indeed to preempt that initial fetch. But then, the client is likely to ask again, as soon as the project backoff allows (and I've seen delays as low as 7 seconds at some projects). In the worst case scenario, that second fetch may ask for enough work to fill all available cores for your full cache setting time. |
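To put a number on that worst case, here is a back-of-the-envelope C++ sketch. It assumes, as a simplification, that the request is simply cores multiplied by cache time, which is not necessarily how the real work-fetch code computes it. The function names and the example figures (a 1-day cache, 3-hour tasks) are hypothetical.

```cpp
#include <cmath>

// Rough upper bound on one work request, in seconds, assuming the client
// asks for enough work to keep every core busy for the whole cache period.
double worst_case_request_seconds(int ncpus, double cache_days) {
    const double seconds_per_day = 86400.0;
    return ncpus * cache_days * seconds_per_day;
}

// Number of whole tasks needed to cover a request, given an estimated
// per-task duration in seconds.
double tasks_to_cover(double request_seconds, double task_seconds) {
    return std::ceil(request_seconds / task_seconds);
}
```

Under those assumptions, a 12-thread machine with a 1-day cache could ask for `worst_case_request_seconds(12, 1.0)` = 1,036,800 seconds, which 3-hour tasks would cover with `tasks_to_cover(1036800.0, 10800.0)` = 96 tasks in a single fetch.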

Dave Joined: 28 Jun 10 Posts: 3045

|

> In the worst case scenario, that second fetch may ask for enough work to fill all available cores for your full cache setting time.

As opposed to the issue with CPDN, where people keep clicking on <update project> and restart the backoff for an hour! |

Joseph Stateson Joined: 27 Jun 08 Posts: 642

|

Still having problems, and I tried 7.16.20. I tried to make sure the share = 0 was recognized, and configured only for Einstein instead of WCG.

Rebuild of old system XPS-435t with three gtx-1060:

- Installed win10x64 21h2.
- Installed all Visual C Runtime (all versions).
- Installed 7.16.20 and set advanced view.
- Added Einstein (my project default is GPU and share = 0).
- Saw 100% appear under share and set "no new tasks" as soon as that option was enabled.
- After a minute or two I saw a single task executing and that share had gone to 0.
- I looked at the event log and the two GPUs that had only 3 GB of memory were being ignored.
- I edited cc_config so that all 3 GPUs work and rebooted.
- Next time I looked there were 3 tasks executing, but there were 12 GPU tasks waiting to execute. There should have been none waiting to execute.

The CPU has 12 threads. I checked, but the 12 waiting tasks were all GPU tasks, none were CPU. Just checked again and only 11 are left. Eventually it will get down to 0 and then will be getting 1 for each one I turn in, which is correct for share=0.

Two days ago I aborted over 700 WCG tasks (total of 1200 in the last 2 weeks), but that was my old 7.16.3, and so I decided to try 7.16.20 on a rebuild of an old system. |

|

Joined: 5 Oct 06 Posts: 5150

|

I deliberately put one machine into the state where it was fetching the same quantum of new work every 30 seconds, and getting it, every time - so it was disregarding the new work when calculating what to fetch next time. Is that how your excess tasks arrive? I downloaded and installed the CI test build of #4592: that cured it. |

|

Joined: 5 Oct 06 Posts: 5150

|

I've dug out the log:

    03-Dec-2021 19:40:10 [NumberFields@home] [sched_op] CPU work request: 5143.59 seconds; 0.00 devices
    03-Dec-2021 19:40:12 [NumberFields@home] [sched_op] estimated total CPU task duration: 8445 seconds
    03-Dec-2021 19:40:48 [NumberFields@home] [sched_op] CPU work request: 5450.16 seconds; 0.00 devices
    03-Dec-2021 19:40:50 [NumberFields@home] [sched_op] estimated total CPU task duration: 8488 seconds
    03-Dec-2021 19:41:24 [NumberFields@home] [sched_op] CPU work request: 5414.62 seconds; 0.00 devices
    03-Dec-2021 19:41:26 [NumberFields@home] [sched_op] estimated total CPU task duration: 8488 seconds
    03-Dec-2021 19:41:58 [NumberFields@home] [sched_op] CPU work request: 5540.89 seconds; 0.00 devices
    03-Dec-2021 19:42:00 [NumberFields@home] [sched_op] estimated total CPU task duration: 8488 seconds

If that's how your 'hundreds of tasks' reached your machine, we know the answer and it's been fixed (though not yet released). If they arrived by some other mechanism, please give us the details. |
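The pattern in that log, where each request is roughly the same size even though the previous one was granted, can be mimicked with a toy model. The C++ sketch below is only an illustration under stated assumptions: it is not the client's work-fetch code, it ignores tasks finishing between contacts, and `simulate_queue` and its parameters are hypothetical names.

```cpp
#include <cmath>

// Toy model: returns the queued work (in seconds) after `rounds` scheduler
// contacts. If count_fetched_work is false, the client ignores work it has
// already fetched when computing the shortfall, which is the behaviour
// described above as "disregarding the new work".
double simulate_queue(double target_seconds, double task_seconds,
                      int rounds, bool count_fetched_work) {
    double queued = 0.0;
    for (int i = 0; i < rounds; ++i) {
        double counted = count_fetched_work ? queued : 0.0;
        double shortfall = target_seconds - counted;
        if (shortfall <= 0.0) {
            continue;  // buffer looks full, so no work is requested
        }
        // The server sends whole tasks, so a reply can exceed the request.
        double tasks = std::ceil(shortfall / task_seconds);
        queued += tasks * task_seconds;
    }
    return queued;
}
```

With a hypothetical 5000-second target and 4000-second tasks, four contacts leave 8000 seconds queued when fetched work is counted, but 32000 seconds when it is ignored, and the gap keeps growing with every further contact.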

Joseph Stateson Joined: 27 Jun 08 Posts: 642

|

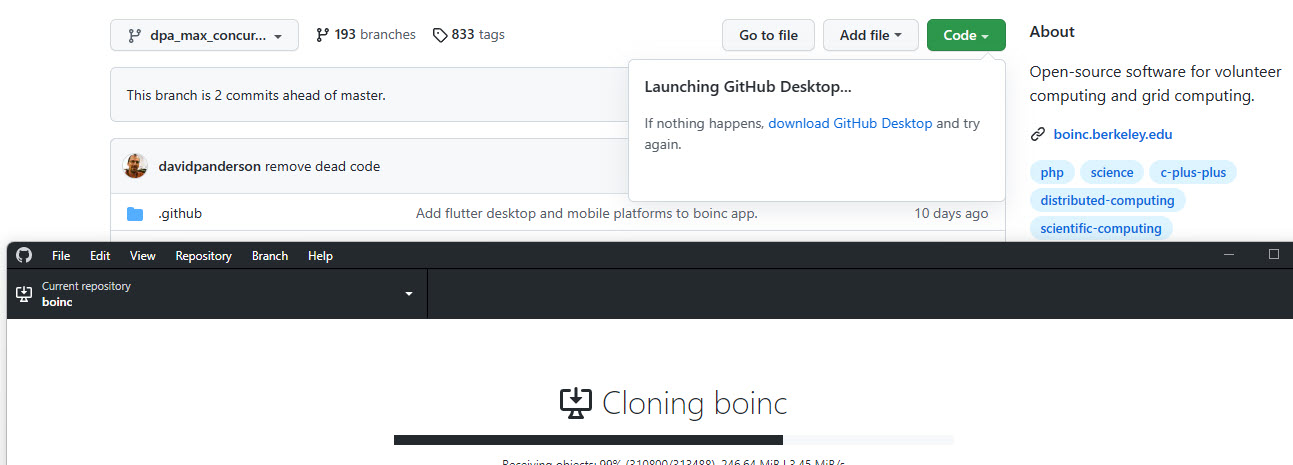

> I deliberately put one machine into the state where it was fetching the same quantum of new work every 30 seconds, and getting it, every time - so it was disregarding the new work when calculating what to fetch next time. Is that how your excess tasks arrive?

Doing something wrong: got the code that did not have the changes.

- Clicked on that 4592 issue.
- Clicked on "dpa_max_concurrent".
- Observed the 6 day old change at client, so I think I am looking at the mod you tested.
- Selected "CODE" (the green box) and clicked on "Open with GitHub desktop".
- Put the download in my project folder using my GitHub desktop.
- Built using VS2019 release x64, no errors, under win11.
- Looked at work_fetch.cpp and none of the changes were there.
- Went back and re-looked at the green box, and it is downloading from github.com/BOINC/boinc.git, which I suspect is not what I wanted.

I am not up to speed on using GitHub for anything more than sharing my code. I wanted to test that new BOINC fix on my system, as I want to enable WCG and do not want another 500+ downloads. I built 3 systems in the last two weeks, one for a nephew and 2 for one of my kids. I forgot about the problem on the first system and was too slow getting around to stopping the WCG downloads on the next two. |

|

Joined: 5 Oct 06 Posts: 5150

|

I've just been having the same conversation with another user by email. So this is conveniently on my clipboard: https://drive.google.com/drive/folders/14C1sfF9wDbG1U0fPSwkXx3jq_M1HrxwB?usp=sharing You'll need both a .ZIP handler and a 7-zip handler to unpack boinc.exe - so good they compressed it twice. |

Joseph Stateson Joined: 27 Jun 08 Posts: 642

|

> I've just been having the same conversation with another user by email. So this is conveniently on my clipboard:

????? This must be your test handler that showed the problem.

I had to suspend Einstein as it was downloading days' worth of data with share set to "0", which is not right. I have 151 Einstein tasks waiting to run. I can actually do that as the 2 GPUs are good and the deadline is not tomorrow.

Why is share being set to 100%? It is shown as 0 in the manager, but 100 is listed in the log (BoincTasks log):

    xps-435t
    1 12/7/2021 2:24:42 PM Starting BOINC client version 7.19.0 for windows_x86_64
    2 12/7/2021 2:24:42 PM This a development version of BOINC and may not function properly
    3 12/7/2021 2:24:42 PM Libraries: libcurl/7.80.0-DEV Schannel zlib/1.2.11
    4 12/7/2021 2:24:42 PM Data directory: C:\ProgramData\BOINC
    5 12/7/2021 2:24:42 PM Running under account josep
    6 12/7/2021 2:24:43 PM CUDA: NVIDIA GPU 0: GeForce GTX 1060 3GB (driver version 456.71, CUDA version 11.1, compute capability 6.1, 3072MB, 2488MB available, 3936 GFLOPS peak)
    7 12/7/2021 2:24:43 PM CUDA: NVIDIA GPU 1: GeForce GTX 1060 3GB (driver version 456.71, CUDA version 11.1, compute capability 6.1, 3072MB, 2488MB available, 3936 GFLOPS peak)
    8 12/7/2021 2:24:43 PM OpenCL: NVIDIA GPU 0: GeForce GTX 1060 3GB (driver version 456.71, device version OpenCL 1.2 CUDA, 3072MB, 2488MB available, 3936 GFLOPS peak)
    9 12/7/2021 2:24:43 PM OpenCL: NVIDIA GPU 1: GeForce GTX 1060 3GB (driver version 456.71, device version OpenCL 1.2 CUDA, 3072MB, 2488MB available, 3936 GFLOPS peak)
    10 12/7/2021 2:24:43 PM All projects have zero resource share; setting to 100
    11 12/7/2021 2:24:43 PM Version change (7.16.20 -> 7.19.0)

Why the following code in cs_statefile.cpp?

    // if total resource share is zero, set all shares to 1
    //
    if (projects.size()) {
        unsigned int i;
        double x=0;
        for (i=0; i<projects.size(); i++) {
            x += projects[i]->resource_share;
        }
        if (!x) {
            msg_printf(NULL, MSG_INFO,
                "All projects have zero resource share; setting to 100"
            );
            for (i=0; i<projects.size(); i++) {
                projects[i]->resource_share = 100;
            }
        }
    }

Is this something that can be turned in as an issue? |

Jord Joined: 29 Aug 05 Posts: 15729

|

> You'll need both a .ZIP handler and a 7-zip handler to unpack boinc.exe - so good they compressed it twice.

Or just 7-zip, as it can unzip ZIPs (and RARs, and TARs, and TAR.GZs) as well. |

|

Joined: 5 Oct 06 Posts: 5150

|

> why the following code in cs_statefile.cpp?

I don't know, but I have traced the history. That code was introduced in https://github.com/BOINC/boinc/commit/86ccb6eed36aec51d4611869a86ce8a1066eb3c4, which is described as 'fix my last checkin'. Fortunately, I've kept a chronological listing, and I can confirm that David's immediately preceding checkin was https://github.com/BOINC/boinc/commit/f716dcf7ae828b64f1bafe9d016ca6c1aeebca4d, which is where the whole concept of a backup project was introduced. I can only presume that the implementation of 'backup project' went west if every project was backing up each other. You'd have to argue pretty strongly to reverse a supposed fix. |

|

Joined: 25 Nov 05 Posts: 1654

|

Wild guess: "If All projects are set to zero, then there's no point in trying to do anything. So obviously this person doesn't know what he's doing. I'll be helpful and set them to 100% for him." |

Joseph Stateson Joined: 27 Jun 08 Posts: 642

|

What I find strange is that, of all the settings the user can control, the parameter that determines a project's "share" is controlled at the project account and not at the BOINC Manager. My first thought was that setting all to 100% allowed the bundled Charity Engine to start crunching on unsuspecting users who would never have a project account nor know the definition of "share". However, after reading what Richard wrote about "fix my last checkin", I decided that Hanlon's razor is applicable here.

I think there is a fix that does not involve adding an option to cc_config nor deleting that code. I run WUProp@home on systems that do not crunch CPU tasks so that I can observe the CPU temperature that BoincTasks displays. I just need to install WUProp on all new builds. It always runs at 100% and only one app ever runs. That will fix the "set all projects to 100%" problem. It just needs to be the first project added on new builds. |
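Why the WUProp workaround should help can be seen from a standalone C++ sketch of the reset logic quoted from cs_statefile.cpp earlier in the thread. This mirrors only that snippet: `Project` is a hypothetical stand-in for the client's much larger project record, and `reset_if_all_zero` is an illustrative name, not a BOINC function.

```cpp
#include <vector>

// Minimal stand-in for the client's project record (hypothetical; the real
// class has many more fields).
struct Project {
    double resource_share;
};

// Mirrors the quoted logic: if the shares of all attached projects sum to
// zero, every share is reset to 100. Returns true if the reset was applied.
bool reset_if_all_zero(std::vector<Project>& projects) {
    if (projects.empty()) {
        return false;
    }
    double total = 0.0;
    for (const Project& p : projects) {
        total += p.resource_share;
    }
    if (total != 0.0) {
        return false;  // at least one project has a non-zero share
    }
    for (Project& p : projects) {
        p.resource_share = 100.0;
    }
    return true;
}
```

In this sketch, a lone zero-share project is bumped to 100, whereas a list that already holds one project at share 100 leaves a second, zero-share project untouched. That is consistent with attaching a non-zero-share project first on a new build.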

|

Joined: 5 Oct 06 Posts: 5150

|

Is this a new problem in #4592? I've just had a rogue fetch from GPUGrid - an NVidia GPU fetch, different from the CPU fetches that #4592 was designed to address.

I have two NVidia GPUs in the machine running the #4592 artifact - a GTX 1660, and a GTX 1650. I am currently running three projects which can use NVidia GPUs: WCG/Covid, Einstein, and GPUGrid. WCG GPU tasks are short - three or four minutes. They can fit in anywhere, but are rarely available. GPUGrid tasks are looong - around 20 hours on the 1660, much longer on the 1650. And they would like their science back quickly, please - ideally within 24 hours. So the 1650 is no use, and I have

    09/12/2021 14:44:10 | GPUGRID | Config: excluded GPU. Type: NVIDIA. App: all. Device: 1

Einstein almost always has work, and it's of intermediate size - 15 to 25 minutes, depending on the card. So, my recent work plan has been:

- Fetch 6 hours of work from Einstein.
- Suspend the last few, so the machine is constantly ready to download more, but isn't allowed to fetch from Einstein.
- Script an 'update' from WCG every few minutes, so it has a chance of catching new work whenever available.
- Allow GPUGrid work to download when needed.
- Repeat three times per day.

That has been working fine. I got a new GPUGrid task this morning, and it's running - about 3 hours into its 20-hour stretch. And I can see this in the Event Log:

    09/12/2021 13:26:30 | GPUGRID | Sending scheduler request: Requested by project.
    09/12/2021 13:26:30 | GPUGRID | Requesting new tasks for Intel GPU
    09/12/2021 13:26:30 | GPUGRID | [sched_op] NVIDIA GPU work request: 0.00 seconds; 0.00 devices
    09/12/2021 13:26:30 | GPUGRID | [sched_op] Intel GPU work request: 25920.00 seconds; 1.00 devices
    09/12/2021 12:26:26 | GPUGRID | Scheduler request completed: got 0 new tasks

(GPUGrid doesn't support Intel GPU, so that's as it should be.)

But it was time to refill the Einstein pot, and in the middle of that, I got

    09/12/2021 14:16:19 | GPUGRID | Sending scheduler request: To fetch work.
    09/12/2021 14:16:19 | GPUGRID | Requesting new tasks for NVIDIA GPU and Intel GPU
    09/12/2021 14:16:19 | GPUGRID | [sched_op] NVIDIA GPU work request: 15428.76 seconds; 0.00 devices
    09/12/2021 14:16:19 | GPUGRID | [sched_op] Intel GPU work request: 25920.00 seconds; 1.00 devices
    09/12/2021 14:16:20 | GPUGRID | Scheduler request completed: got 1 new tasks

That shouldn't have happened (and has never happened before), because the only device GPUGrid is allowed to run on is going to be busy all night.

Any comparable observations? Should I ask David to investigate (and risk delaying the next release for another decade), or just work round it? |

Copyright © 2025 University of California.

Permission is granted to copy, distribute and/or modify this document

under the terms of the GNU Free Documentation License,

Version 1.2 or any later version published by the Free Software Foundation.